Chapter 2 Scientific Literature

This chapter examines the role of research involving the scientific literature and patent analytics. Analysis of scientific literature is a specialised field in its own right in the form of bibliometrics or scientometrics with its own specialist journals such as Scientometrics and other publications (An Introduction to Bibliometrics 2018). These fields cover a wide range of topics involving statistical analysis of scientific literature such as indicators for science and technology, exploration of the impacts of scientific research, research networks, and the mobility of researchers. These field are characterised by a combination of qualitative and quantitative methods and are frequently oriented towards the understanding of trends in science and technology to inform research and innovation policies. The relationship between science and technological innovation is an important focus of research and links analysis of the scientific literature with patent literature. This chapter focuses on how analysis of the scientific literature can inform patent analytics in three main ways:

- By informing search strategies;

- By identifying actors who are active inside or outside the scientific literature as part of landscape analysis;

- Identifying potential opportunities for economic development to address the needs of developing countries.

A key historic constraint in the ability to develop patent analysis informed by the scientific literature has been the lack of access to scientific data at scale. This situation is changing radically as a result of efforts to make metadata on scientific publications publicly accessible. The prime example of this is OpenAlex from Our Research as the replacement for Microsoft Academic Graph, that builds on work by organisations such as Crossref, The Directory of Open Access Journals to make data openly accessible. Other important initiative include the work of PubMed in making publicly funded research in the medical and biosciences openly accessible and CORE which makes the full texts of scientific publications openly accessible.

We will begin with a brief overview of ways to access the scientific literature before turning to a discussion of scientific data fields using data from Clarivate Analytics Web of Science as an example. We will then explore how the scientific literature can be used to inform search strategies through the identification of terms from the scientific literature for use in patent searches. We will then look at methods for matching actors from the scientific literature into the patent literature using data from ASEAN countries as an example. Finally, we will look at how comparisons between the scientific literature and the patent literature can assist developing countries with identifying opportunities for economic development to address their needs.

2.1 Accessing the Scientific Literature

The main means for accessing the scientific literature is through databases of scientific literature and increasingly through open access databases using web services of application programming interfaces (APIs).

Researchers based in Universities will generally be familiar with two of the largest of the commercial databases of the scientific literature, Web of Science/Web of Knowledge from Clarivate Analytics or Elsevier’s Scopus. Open access databases such as PubMed and Crossref (containing metadata on over 96 million publications) are increasingly popular and link to initiatives such as core.ac.uk that, at the time of writing, make the full texts of over 113 million publications publicly available. Databases such as Google Scholar are a popular open access source of information on the scientific literature and access to copies of texts while social network sites for researchers such as Research Gate provides a means for scholars to share their research and create shared projects. An important feature of recent developments in scientific publication is a shift in emphasis towards open access publications on the part of researchers and funding agencies. This is reflected in services such as core.ac.uk noted above and in services such as Unpaywall which provides a browser plugin to identify open access versions of articles. At present Unpaywall contains links to over 19 million scientific publications. An important aspect of this shift in emphasis towards open access is cross service integration. Thus Unpaywall is based on and resolves article identifiers to the content of Crossref while the commercial Web of Science database provides links to Unpaywall in its results to allow free retrieval of articles. Other important emerging services include tools such as Open Academic Graph which provides access to meta data on over 330 million publications.

As this makes clear the landscape for accessing scientific literature is changing as a result of the rise of web service enabled database and cross-service integration tools. In practical terms this means that access to the scientific literature is no longer entirely dependent on fee based databases.

It is important to emphasise that publication databases normally have strengths and weaknesses in terms of:

- Coverage of journals, books and other publications

- The languages covered and availability of translations

- The range of fields available for analysis (authors, affiliations, titles, abstracts etc.)

- The basis of any statistical counts (e.g. counts of citing articles)

- The number of records that can be downloaded

- The format in which records can be downloaded

These issues impose constraints on what can be searched and downloaded from scientific databases. For example, in our experience Web of Science permits for the downloaded of a wider range of data fields than Scopus, while open access databases enjoy the advantage of being free but are more limited in terms of the data fields that are available and the consistency of coverage, such as abstracts.

When seeking to carry out literature research as part of a wider patent analytics project it is therefore important to consider the strengths and weaknesses of particular databases and to use multiple sources where necessary.

2.2 Searching Literature Databases

2.2.1 Stemming

When searching a literature database it is important as a first step to understand the available search fields and search operators (such as OR and AND). Many databases now offer what is called word “stemming” that will look for similar words or phrases based on the root of the terms used during input… for example if we input the word “drone”, a stemmed version based on the root “drone” would include words like “drones”, “droned” and “droning”. In technical terms words like “drones”, “droned” and “droning” are lemmas.

Word stemming is a powerful tool for expanding the range of searches and can be extended to using synonyms. Specialist tools such as WordNet, a lexical database of English words and synonyms, can be used to identify synonyms on a search term (Fellbaum 2015). WordNet can be used in a range of programming languages or using the free online tool. The results of a search of WordNet for the word Drone are presented below:

Noun

- S: (n) drone (stingless male bee in a colony of social bees (especially honeybees) whose sole function is to mate with the queen)

- S: (n) monotone, drone, droning (an unchanging intonation)

- S: (n) dawdler, drone, laggard, lagger, trailer, poke (someone who takes more time than necessary; someone who lags behind)

- S: (n) drone, pilotless aircraft, radio-controlled aircraft (an aircraft without a pilot that is operated by remote control)

- S: (n) drone, drone pipe, bourdon (a pipe of the bagpipe that is tuned to produce a single continuous tone)

Verb

- S: (v) drone (make a monotonous low dull sound) “The harmonium was droning on”

- S: (v) drone, drone on (talk in a monotonous voice)

The use of a stemming tool helps to reveal the range of possible uses of a search term. In the case of the word drone we can see references to bees, to sound, to a part of a musical instrument and for pilotless aircraft.

The range of the uses of these terms suggests a need for caution. Thus, the use of the term drone in a scientific database is likely to return results on all of these potential uses of the work drone. Stemming algorithms can both aid and hinder information retrieval. For example, if stemming is automatically turned on then the word “droning” would automatically be included and thus populate the results with data on the irrelevant subject of sound for those interested in drone technology. In contrast, where the stemming tool displays synonyms we might wish to include pilotless aircraft in the original search.

In practice, when initiating a search of a database of the scientific literature on an unfamiliar subject it is generally best to turn off stemming and to focus on downloading a test set of results for review. The aim here is to use a limited set of terms to identify other potentially relevant terms and terms that can be excluded from a sample.

This approach can be used with a wide range of software tools, including simple tools such as Excel or free online tools. The objective is to take available fields such as the title, abstract, and author keywords and to break them down into their constituent words and phrases. This is a process known as tokenizing text fields into words, phrases (ngrams), sentences and paragraphs of various lengths and is a fundamental feature of computational linguistics, text mining and machine learning [refs]. We will look in greater detail at text mining in Chapter 6.

One powerful tool in working with both scientific and patent data is VantagePoint from Search Technology Inc. VantagePoint is available in a student edition and 32 and 64 bit versions for Windows. VantagePoint is able to import a wide range of different data sources and automatically tokenize text fields into words and phrases.

Table 2.1 below presents the combined top 50 terms in the titles, abstracts, and author keywords from a search of Web of Science for the words drone or drones between 2010 and 2017.

| records | terms | multi_word |

|---|---|---|

| 479 | drones | 0 |

| 241 | drone | 0 |

| 240 | results | 0 |

| 181 | study | 0 |

| 170 | use | 0 |

| 141 | article | 0 |

| 126 | rights reserved | 1 |

| 91 | number | 0 |

| 89 | queens | 0 |

| 88 | development | 0 |

| 87 | workers | 0 |

| 86 | Apis mellifera | 1 |

| 85 | data | 0 |

| 85 | UAV | 0 |

| 84 | one | 0 |

| 81 | colonies | 0 |

| 69 | analysis | 0 |

| 62 | time | 0 |

| 60 | first | 0 |

| 56 | effects | 0 |

When we inspect the results in Table 2.1 we see a full list of words and phrases. Many of these will not be relevant to drones as such, for example the words article or study and common noisy terms such as “and, or, of, for” are commonly excluded as stop words. In other cases references to the word “queens” or its root “queen” along with “Apis mellifera” and “colonies” suggests that we have a lot of data on bees somewhere in the data. In our next iteration of the search we would probably want to explicitly exclude these terms from the search. However we can also detect other words that we might want to include in our search such as “UAV” for Unmanned Aerial Vehicle.

In practice multi word phrases (ngrams) commonly express concepts and bring us closer to the terms that we will want to use in a search. Table 2.2 ranks the data based on multi-word phrases.

| records | terms | multi_word |

|---|---|---|

| 126 | rights reserved | 1 |

| 86 | Apis mellifera | 1 |

| 50 | honey bee | 1 |

| 49 | unmanned aerial vehicles | 1 |

| 44 | honey bees | 1 |

| 42 | Unmanned Aerial Vehicles (UAVs) | 1 |

| 40 | United States | 1 |

| 32 | remote sensing | 1 |

| 29 | unmanned aerial vehicle (UAV) | 1 |

| 28 | unmanned aerial vehicle | 1 |

| 28 | Varroa destructor | 1 |

| 25 | drone strikes | 1 |

| 24 | recent years | 1 |

| 23 | Elsevier Ltd | 1 |

| 22 | experimental results | 1 |

| 20 | drone warfare | 1 |

| 20 | present study | 1 |

| 479 | drones | 0 |

| 241 | drone | 0 |

| 240 | results | 0 |

Table 2.2 reveals irrelevant phrases such as “honey bee” and its plural “honey bees” along with Varroa destructor, a mite that parisitises bees. We also observe the prevalence of unmanned aerial vehicles and their plurals linked to the term UAV and applications of drone technology such as “drone warfare” and “drone strikes”.

A review of the terms captured in initial exploratory research can thus go a long way to refining a search strategy without a huge investment in time or software tools.

2.2.2 Using Search Operators

Many databases include options for operator based searching. The common operators are: OR, AND and NOT.

this OR that this AND that this NOT that

The OR operator is an open operator for example we could search for

drone OR drones OR droning

This will locate texts that contain any of these terms. If we wanted to restrict the search to those that contain all of the terms we would use AND.

drone AND drones AND droning

Note that this is a more restrictive form of search because the documents must contain all three words.

In contrast, if we were interested in drone technology and not in other uses of the word drone such as in musical instruments we would use NOT.

drone OR drones NOT droning

This would only return documents where the search terms drone or droning appeared without the word droning. Another perhaps more precise strategy would be to also exclude music.

drone OR drones NOT (bee OR bees OR “Apis mellifera” OR “honey” OR droning OR music)

The parentheses in the above search query are important because they specify that documents containing either be or bees etc. should be excluded from the results. The use of NOT based searching is a powerful way of excluding irrelevant documents.

Boolean operators are extremely important when constructing search terms and may be expressed in other ways if using databases programmatically. For example in R both | and || mean OR and & or && means AND. In contrast Python uses logical OR, logical AND, logical NOT. A growing number of databases are powered by the Java based Apache Lucene or Solr (now best known perhaps from the widely used Elasticsearch). In Lucene and Solr in addition to the standard operators there is also “+” which specifies that a document must contain a term and may contain another term.

+drone “unmanned aerial vehicle”

To force both to appear we would use or the regular AND.

+drone +“unmanned aerial vehicle”

Note that the parentheses in the above version are important because it articulates that one or the other term should be excluded from the results. For more advanced programmatic uses, for example, using Elasticsearch or the PatentsView API you will frequently need to construct queries using the popular JavaScript Object Notation language (JSON). For example, the popular Lens patent database from Cambia in Australia offers a public interface and an API powered by Elasticsearch. Use of the API requires familiarisation with the construction of queries written in JSON. While this can be intimidating at first, worked examples, such as those provided in the Lens API documentation and the Elasticsearch documentation provide a set of signposts to writing your own queries for programmatic use with APIs.

2.2.3 Proximity Operators

Proximity operators focus on the distance between words in a search term for example the operator NEAR with Web of Science allows the user to specify the distance between words such as (drone OR drone) NEAR/10 droning would find texts containing the word drone or drone within 10 words of the word droning. Another option, again from Web of Science is SAME. This is used in searches of the author affiliation field to treat two words as the same during search such as in the address field AD=(McGill Univ SAME Quebec SAME Canada). This search will treat the word Quebec and Canada as the same.

Proximity operators can provide powerful tools for targeting a search of the scientific literature. However, when preparing to develop your search it is important to check the default settings used by the database and whether that meets your needs. In addition, it is important to note the operators that are available and the form that is expected. These will typically vary across the different databases. For example, many databases turn on stemming by default, use AND (rather than OR) as the default Boolean and may use ADJ (adjacent) as the default operator. Checking these settings at the beginning is important for avoiding confusing results when trying to establish a clear baseline search and in particular when working across multiple data sources.

2.2.4 Regular Expressions

The use of regular expressions will be covered in greater detail in the discussion of text mining. However, it is worth noting that common regular expressions that you may be able to use in a literature databases include

^ starts with

* any character wildcard. For example dron* would capture drone, drones, droned, droning etc. This can be used at the beginning, middle or the end of a term but is commonly used at the end. The wildcard should be used with caution. For example, a search for genomics related literature using the root genom and the wild card genom* will capture a potentially large number of results for the common German word genommen (took).

$ ends with. For example drone$ would exclude drones and other close terms, however it would capture terms containing drone such as the Italian word “androne” (meaning entrance or entrance hall). As such, beware of unexpected results.

Regular expressions can be combined in a whole variety of ways. One of the most useful is exact matching.

^drone$ This will exactly match the word drone and no other term.

Very basic engagement with regular expressions is a powerful tool and it is well worth learning the basics. A good place to start is the long standing Regular Expressions Tutorial. However, be warned that regular expressions can become complex and difficult to understand quite rapidly. A well known quote about regular expressions is attributed to former Netscape Engineer Jamie Zawinski in a Usenet discussion group from 1997.

Some people, when confronted with a problem, think “I know, I’ll use regular expressions.” Now they have two problems. 1

The point that is being made here is that regular expressions should not be the tool of first resort for every problem. Regular expressions can rapidly become very complex and difficult for a reader, including its author, to understand. Having said this a basic understanding of regular expressions is a very important part of the patent analysts toolbox as it allows you to precisely control what you are searching for and to parse the results. Tokenization of texts discussed above is an example of this that is typically based on word boundary matches (such as \\b) while named entity recognition in texts is often based on the identification of capitalized terms such as ^[[:upper]] or the equivalent ^[A-Z] to identify proper nouns (which are marked by the use of capital letters at the start of the word) such as people, place and other entity names. The precise form of a regular expression often depends on the language being used with Open Refine’s GREL or Google Regular Expression Language providing a nice practical introduction to using regular expressions as discussed in Chapter 8 of the WIPO Manual on Open Source Patent Analytics. Programming languages such as R include dedicated packages such as stringr that make it easier to work with regular expressions and cheat sheets have been developed to assist in remembering regular expressions.2. Websites such as the Regular Expressions Tutorial and https://regex101.com/ allow you to test out regular expressions in a range of different programming languages.

2.3 Precision vs. Recall

At the end of the testing phase with scientific literature a set of new candidate terms and exclusion terms is the desirable outcome. For example, a more refined approach to the development of a search query for drone technology might look something like this.

“drone” OR “drones” OR “UAV” OR “UAVs” OR “Unmanned Aerial Vehicle” OR “Unmanned Aerial Vehicles” OR “Pilotless Aircraft” NOT (“bee” OR “bees” OR “Apis mellifera” OR “honey” OR “droning” OR “music”)

The use of the quotation marks in this case is intended to prevent a database from stemming the individual terms and the effect is to increase the level of precision in the inclusion and exclusion of terms. While it would be possible to modify this in a variety of ways using the wildcard or boundary markers, the importance of this type of approach is that it is simple, transparent, easy to reproduce and easy to modify in a way that can be tested.

The discussion above is linked to a much larger body of literature on the distinction between Precision and Recall in information theory. For a literature database an example of this would be entering in a set of terms where the database returns 30 pages on drones that carry pizza boxes of which only 20 are relevant but fails to return the other 40 relevant documents. That is a precision rate of 20/30 = 2/3 as only 2 thirds of the returned documents are on topic. In contrast the recall rate is 20/60 or 1/3 because the database only returned a third of the actual relevant documents.3 The first measure is about the accuracy of the results and the second is about the completeness of the results.

In practice precision vs. recall is about striking a good balance between accuracy (precision) and recall (completeness). One strategy for dealing with this is to start by favouring completeness by attempting to capture the universe of things relating to a topic and then filtering the data to arrive at more precise results to address the topic in question.

In the next section we will use examples from the 2019 WIPO Patent Landscape Report on Marine Genetic Resources that used exactly this strategy. The starting point for the research was to capture all scientific publications that contained an author from one of ten South East Asian countries or that contained a reference to the country in the title, abstract or author keywords of a publication. This aim of this approach was to capture the universe of things that needed to be captured. That universe proved to be 391,380 publications after filtering some of the larger country datasets on subject categories to reduce irrelevant subject areas. The second stage of this exercise involved text mining the titles, abstracts and author keywords for marine species names. This radically reduced the dataset to 6,659 scientific publications. As this makes clear, the use of this method can initially be costly in terms of the requirements of initial data retrieval but has the advantage of capturing the universe of relevant documents. On that basis the marine species in the data could be accurately targeted.

One very significant constraint when working with the scientific literature as opposed to patent data is that it is very rare that the full text of a scientific article is available for search. This has a major but not readily quantifiable impact on recall because the major body of the text is not readily accessible. This situation may change as databases such as CORE https://core.ac.uk/k make over 100 million full text scientific publications available for wider analysis.

Debates around precision and recall and related concepts such as relevance are important across a wide range of computer and information retrieval fields including, for example, text and image classification in machine learning approaches. As regular users of search engines, patent analysts like other regular users, will encounter the outcomes of underlying and possibly hidden decisions on the database side about how to handle the balance between precision and recall. This will affect the total number (recall) and precision of the results that are presented to the searcher. It is therefore important to understand that what we receive from a particular database is likely to reflect not only its content (coverage) but these underlying decisions about precision and recall. On balance, in the author’s opinion, for patent analytics it is generally better to favour precision in analysis while at the same time clearly situating that analysis in the often fuzzier context of broader activity. We will return to this topic in the discussion of machine learning.

2.4 Processing Scientific Literature

Databases of the scientific literature commonly return a range of different fields when data is downloaded. These can vary widely but will commonly include most of the following:

- Author Name

- Author Affiliation

- Title

- Abstract

- Author Keywords

- Document Identifier (e.g. doi, issn, isbn, openalexid)

- Funding Acknowledgements (limited coverage)

- Cited references

- Citation counts (documents citing the reference document)

- Subject categories or Concepts (derived)

- Researcher identifiers (ORCID, Researcher ID, PubMed Id, openalexid, other ID)

This data is mainly extracted from the front page of publication or, in the case of references, the end of the document. However, subject category or concepts (e.g. for OpenAlex) are commonly added by the publication database itself. In some cases, such as ISI Web of Science, the classification of subject categories is based on classification of the subject areas covered by a journal and not the individual article. In contrast, building on the work of Microsoft Academic Graph, OpenAlex employs a machine learning based multi-label classification of individual articles (Wang et al. 2020). There are likely to be strengths and weaknesses to each of these approaches. At the time of writing, research in scientometrics and bibliometrics has been dominated by the use of journal subject areas as the basis for subject categories . More recent approaches focusing on article level classification embodied in OpenAlex may well prove superior but, to our knowledge, have yet to be evaluated. We will focus here on Web of Science.

At the time of writing Web of Science used 252 Subject Categories and Scopus groups journals into 4 broad subject areas and 334 fields.4. A journal may be classified in more than one subject area with some such as Science, Nature and PLOS classified as interdisciplinary. The use of subject categories combined with citation analysis has been central to initiatives in the scientometrics community to develop maps of science Bollen et al. (2009b) including online interactive maps such as the https://www.scimagojr.com/shapeofscience/ using Scopus data with a gallery of maps on the structure of science from a range of sources made available through the http://scimaps.org/.

The processing of scientific literature follows a pattern that is very similar to patent data as discussed in Chapter 4. These steps can be described as follows:

- Deduplicate the records using document identifiers (such as Web of Science ISI Unique Identifier, Lens Ids, OpenAlex ids or equivalent) to ensure that no records is over counted.

- Review the dataset for noise and exclude noise as required.

- Clean author names

- Clean affiliation/organisation names

- Clean funding information to focus on funding organisations

- Visualise the data

The deduplication of the data is important to avoid over counting and can readily be achieved using document identifiers. Note that the best source for this is often the internal identifiers used by the databases as they are guaranteed to have 100% coverage unlike the doi field which is normally confined to journal articles.

The exclusion of noise from the dataset will commonly involve reviewing the data by subject category (or concepts in the case of databases using OpenAlex). Table 2.3 below displays the top subject categories in a sample of 1400 publications for the term drone or drones from Web of Science.

| Records | Subject Category | keep | review | exclude |

|---|---|---|---|---|

| 150 | Entomology | 0 | 0 | 1 |

| 136 | Multidisciplinary Sciences | 0 | 1 | 0 |

| 112 | International Relations | 1 | 0 | 0 |

| 100 | Engineering, Electrical & Electronic | 1 | 0 | 0 |

| 82 | Political Science | 1 | 0 | 0 |

| 70 | Law | 1 | 0 | 0 |

| 64 | Telecommunications | 1 | 0 | 0 |

| 58 | Ecology | 0 | 1 | 0 |

| 52 | Environmental Sciences | 0 | 1 | 0 |

| 46 | Zoology | 0 | 0 | 1 |

| 43 | Remote Sensing | 1 | 0 | 0 |

| 40 | Engineering, Aerospace | 1 | 0 | 0 |

| 38 | Robotics | 1 | 0 | 0 |

| 37 | Biology | 0 | 1 | 0 |

| 36 | Computer Science, Information Systems | 1 | 0 | 0 |

Here we can see that we have a significant number of publications in Entomology, and Zoology that are highly likely to be about bees rather than drone technology. When using VantagePoint or other tools including Excel one approach to data cleaning is to create three groupings to assist with the review.

- Keep

- Review

- Exclude

In working through the data each record should be placed on one and only one of the groups. The review grouping is important because it allow you to maintain a focus on the keep and exclude groups and placing anything that is hard to decide about into Review.

In the case of journal subject categories the review group is important because journal subject categories are somewhat crude. For example, Multidisciplinary sciences will include publications on drone technology and on bees. Agriculture related subjects are also a likely review category because bees and drone technology may appear in this category, for example for monitoring fields. The purpose of the review group is to allow time to view the records in particular categories with the aim of allocating all records to either keep or exclude at the end of this process.

When working with data such as subject categories or concepts, the best place to start looking for noise is at very high frequency or very low frequency scores (the head and tail of data ranked by number of records). However, a complementary second step is to look at the sources of the publications. A sample of this data for drone technology from Web of Science is presented in Table 2.4.

| Records | Source Title | keep | review | exclude |

|---|---|---|---|---|

| 49 | NEW SCIENTIST | 0 | 1 | 0 |

| 40 | APIDOLOGIE | 0 | 0 | 1 |

| 26 | PLOS ONE | 0 | 1 | 0 |

| 23 | JOURNAL OF APICULTURAL RESEARCH | 0 | 0 | 1 |

| 20 | SENSORS | 1 | 0 | 0 |

| 18 | AEROSPACE AMERICA | 1 | 0 | 0 |

| 17 | FOREIGN AFFAIRS | 1 | 0 | 0 |

| 17 | REMOTE SENSING | 1 | 0 | 0 |

| 15 | INTERNATIONAL AFFAIRS | 1 | 0 | 0 |

| 14 | IEEE SPECTRUM | 1 | 0 | 0 |

| 13 | JOURNAL OF APICULTURAL SCIENCE | 0 | 0 | 1 |

| 12 | ETHICS & INTERNATIONAL AFFAIRS | 1 | 0 | 0 |

| 12 | JOURNAL OF INTELLIGENT & ROBOTIC SYSTEMS | 1 | 0 | 0 |

| 12 | NEW YORK REVIEW OF BOOKS | 1 | 0 | 0 |

| 11 | INSECTES SOCIAUX | 0 | 0 | 1 |

| 11 | NATION | 1 | 0 | 0 |

| 11 | SCIENTIFIC REPORTS | 0 | 1 | 0 |

| 10 | CHEMICAL & ENGINEERING NEWS | 1 | 0 | 0 |

| 10 | COMPUTER LAW & SECURITY REVIEW | 1 | 0 | 0 |

| 10 | JOURNAL OF ECONOMIC ENTOMOLOGY | 0 | 0 | 1 |

| 10 | JOURNAL OF EXPERIMENTAL BIOLOGY | 0 | 0 | 1 |

| 9 | SCIENCE | 0 | 1 | 0 |

| 9 | SECURITY DIALOGUE | 1 | 0 | 0 |

| 8 | BULLETIN OF THE ATOMIC SCIENTISTS | 1 | 0 | 0 |

| 8 | INTERNATIONAL JOURNAL OF REMOTE SENSING | 1 | 0 | 0 |

In this case we can see that publications such as Apidologie can readily be excluded where as journals such as PLOS ONE that publish across a range of fields would require review. We can also see that a number of social science and humanities subjects are entering into the picture and depending on our purpose we might want to focus publications down to those relating to remote sensing, engineering and related subjects.

As part of this review process it is important not to second guess the technology area. For example, we should not assume that everything associated with biology should be excluded. Biomimicry is for example an important area of inspiration in some areas of drone technology (such as swarming behaviour) while some publications that refer to drones and biology refer to the use of drone technology in anti-poaching and conservation biology. It is precisely because of the lack of predictability of new and emerging areas of technology that an approach concentrating initially on recall and then on precision is often the most successful route to accurate analytics. The alternative is for analysts to impose a definition of a new technology area on the field of research and thus potentially exclude important features of the technology field and debates around those fields (such as military drone strikes).

The outcome of this review process is that each record falls into a keep or exclude category and a smaller dataset is generated containing the data the analyst wishes to keep. At this stage the main body of data cleaning focusing on author organisations (in the author affiliation) and author names along with the text in funding acknowledgements can begin.

The basic procedure for name cleaning has been described in Chapter 8 of the WIPO Manual on Open Source Patent Analytics using the free Open Refine software tool. However, accuracy in name cleaning is best achieved using multiple match criteria to address cases where an author shares a name with another author but is a distinct person. VantagePoint from Search Technology Inc provides a means to achieve this by linking a fuzzy logic name cleaning algorithm that clusters names based on similarity scores with a setting that allows another field to be used to match the data. That is a search for John Smith that is run without match criteria will group different John Smiths together. A clean up that is run by grouping John Smiths using the author affiliation will distinguish between John Smiths working at say the University of California or John Smiths working at London University (Carley, Porter, and Youtie 2019).

As this example also suggests name cleaning is often a multi-step process because in reality multiple John Smiths may work at the University of California. In that case a second step might be to use shared co-authors or subject categories as a basis for decision making using the keep, review, exclude method described above. The same approach is then applied to the applicant organisation where particular attention is required to organisations that share similar names but are distinct entities. Thus, Washington University and the University of Washington are distinct entities. When cleaning organisation names note that decisions need to be made on how to address regional and international organisations and to provide notes in the resulting report or publication on decision-making to inform the reader.

In considering the clean up process for author names described above note that it is often easier to begin by cleaning up the author affiliation names and then to clean author names using the cleaned organisation names as the match criteria.

An important development in recent years has been the increasing use of author identifiers in publication records. A number of author identifier systems exist such as Researcher ID from Web of Science or Scopus ID and PubMed ID but the most important of these is ORCID which is a non-profit open access researcher identifier system. Where a researcher identifier is available these identifiers can be used to cluster variations of names with a degree of certainty that they are the same person or that persons with the same name with distinct ORCID IDs will in fact be distinct persons. At a higher level of detail ORCID ID public profiles can be looked up online to assist with assessing whether a researcher listed as belonging to one institution has moved to another. Cases of author movement will frequently involve a researcher working in the same area of research but listing more than one affiliation. ORCID identifiers help to resolve these cases. However, while ORCID may assist with addressing knotty author name disambiguation problems it is important to note that it is not perfect. For example, ORCIDs may be misassigned or a single person may end up with more than one ORCIC (for example one created by the author and another by the institution). This reveals that no approach to author name disambiguation will ever be perfect. More recent approaches to author identifies, notably OpenAlex building on author ids in Microsoft Academic Graph, merit further evaluation as efforts continue to improve disambiguation at scale at the database level.

Funding data is a relatively new feature in publication databases and the presence of this data, which commonly appears in the Acknowledgements field, can be spectacularly messy. For example:

The COLOSS (Prevention of honey bee COlony LOSSes) network aims to explain and prevent massive honey bee colony losses. It was funded through the COST Action FA0803. COST (European Cooperation in Science and Technology) is a unique means for European researchers to jointly develop their own ideas and new initiatives across all scientific disciplines through trans-European networking of nationally funded research activities. Based on a pan-European intergovernmental framework for cooperation in science and technology, COST has contributed since its creation more than 40 years ago to closing the gap between science, policy makers and society throughout Europe and beyond. COST is supported by the EU Seventh Framework Programme for research, technological development and demonstration activities (Official Journal L 412, 30 December 2006). The European Science Foundation as implementing agent of COST provides the COST Office through an EC Grant Agreement. The Council of the European Union provides the COST Secretariat. The COLOSS network is now supported by the Ricola Foundation - Nature & Culture.

Literature databases are attempting to parse relevant information from this data such as the name of the funder and the contract or award number with varying degrees of success as follows:

COST Action, FA0803 | EU Seventh Framework Programme, - | Ricola Foundation - Nature Culture, -

In considering the brief discussion of regular expressions above note the focus in the parsing of this data on Nouns and Proper Nouns and numeric entries. It is likely that dictionary based approaches and machine learning based name entity recognition are increasingly being applied to this type of problem. The Research Organization Registry (ROR) Community is directed towards the disambiguation of research organization names and releases regular updates of a standard and open access organisation register that is now included in OpenAlex and other databases (building on the earlier GRID initiative). While not directed explicitly to funding organisations, funding and other organisations (such as the European Commission) can appear in ROR entries and thus assist with cleaning up funding information.

An important challenge when dealing with funding information is determining whether data should be grouped or not. For example should funding from the European Commission under the Framework programmes and those under European regional or sectoral funds be grouped together? The answer to this question will depend in part on the level of detail required by the research. However, this can be very important when visualising data on funding, such as comparison between the EU and the US where a failure to aggregate at the right level may lead to inadvertent misrepresentation of the data. In general the approach taken, such as grouping all EU level funding together, should be made clear in an explanatory note to the reader when presenting the results of the data.

As noted above the cleaning of funding data is closely related with cleaning organisation names. The development of the ROR dataset, encompassing over 100,000 organisation names and related acronyms and variants is an important step forward. The inclusion of ROR entries in OpenAlex (as with the earlier GRID identifiers in Microsoft Academic Graph) takes away a significant portion of the pain of name cleaning for scientific organisations. However, in the case of OpenAlex and earlier MAG a significant portion of organisation names may not have an ROR id or not map to an existing name or variant. Cleaning of this data requires investigation of the raw organisation data (institutions in OpenAlex) and the use of tools such as VantagePoint. Nevertheless, the advantage of the increasing application of ROR ar the database level is that over time an increasing number of organisation names will be cleaned up and (as we will see below) will become available for other tasks such as geospatial mapping.

One important observation on cleaning data is to consider how detailed the cleaning operation needs to be. For example, if only the top ten or top 20 results will be shown to the reader it is important to ensure that person, organisation or funding organisation have been cleaned to capture all relevant name variants to ensure the accuracy of counts. Having said this, there is no point whatsoever expending energy cleaning data that will never be used or seen by someone else. Finding the right balance in name cleaning between ensuring accuracy of reporting and avoiding wasting time and effort is an important skill but will often depend on the requirements of the specific task and the data at hand.

We will return to the subject of name cleaning elsewhere in the handbook. However, in closing this discussion we would emphasise the importance of resources such as ROR in setting a standard for the use of names and the efforts of the USPTO PatentsView team in creating disambiguation tables for applicant and inventor names (available through the PatentsView data service for grants and applications or pregrants). These initiatives, despite their weaknesses, can save patent analyst many hours of work and readily lend themselves to use in automated routines (e.g. SQL table joins).

We now turn to some basic feature of visualisation of the scientific literature.

2.5 Visualizing the Scientific Literature

A wide range of options are available for visualising data from the scientific literature. Typically this will include basic data on trends, geographic distribution of records, subject areas, top ranking organisations and researchers. When working to visualize data it is a very good idea to become familiar with some of the excellent literature on this topic notably the classic book The Visual Display of Quantitative Information by Edward Tufte (1983) and the more recent Stephen Few (2012) Show Me the Numbers: Designing Tables and Graphs to Englighten.

To illustrate some approaches to visualising data from the scientific literature we will use data from the 2019 WIPO Patent Landscape Report on Marine Genetic Resources.

2.5.1 Dashboards

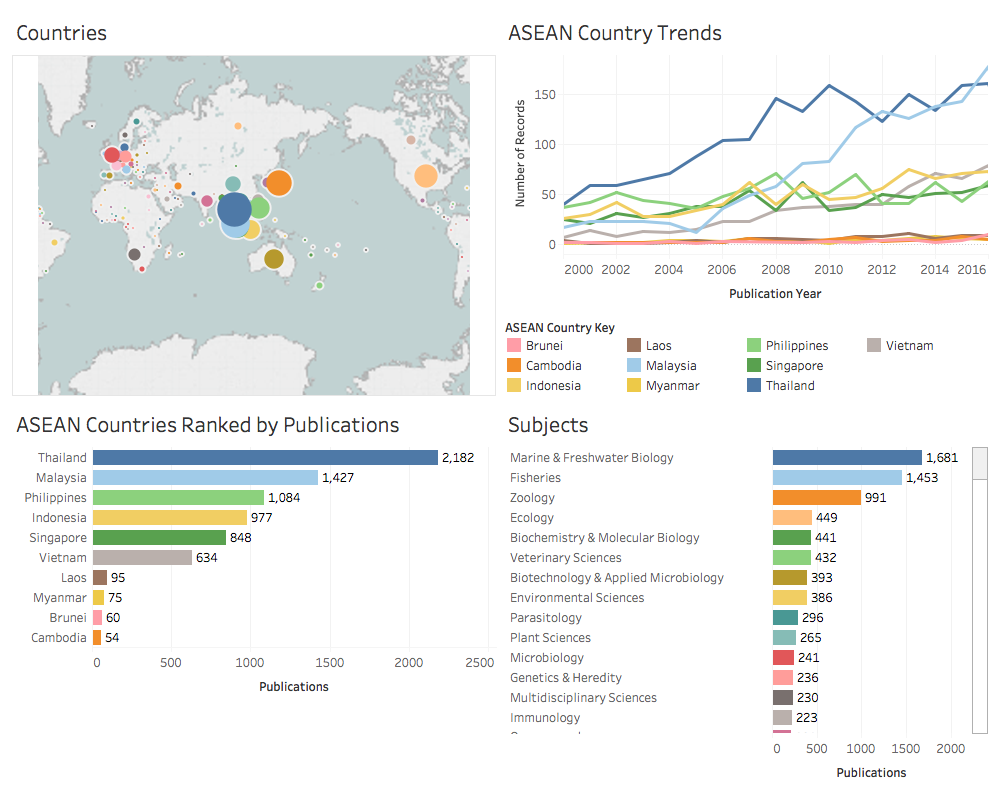

Dashboards are a powerful and popular way of summarising data. The creation of basic dashboards using Tableau is covered in detail in the Chapter 11 of the WIPO Manual on Open Source Patent Analytics. Figure 2.1 shows a summary of the overall data on scientific research on marine genetic resources in South East Asia.

Figure 2.1: Overview of Research on Marine Genetic Resources in ASEAN Countries

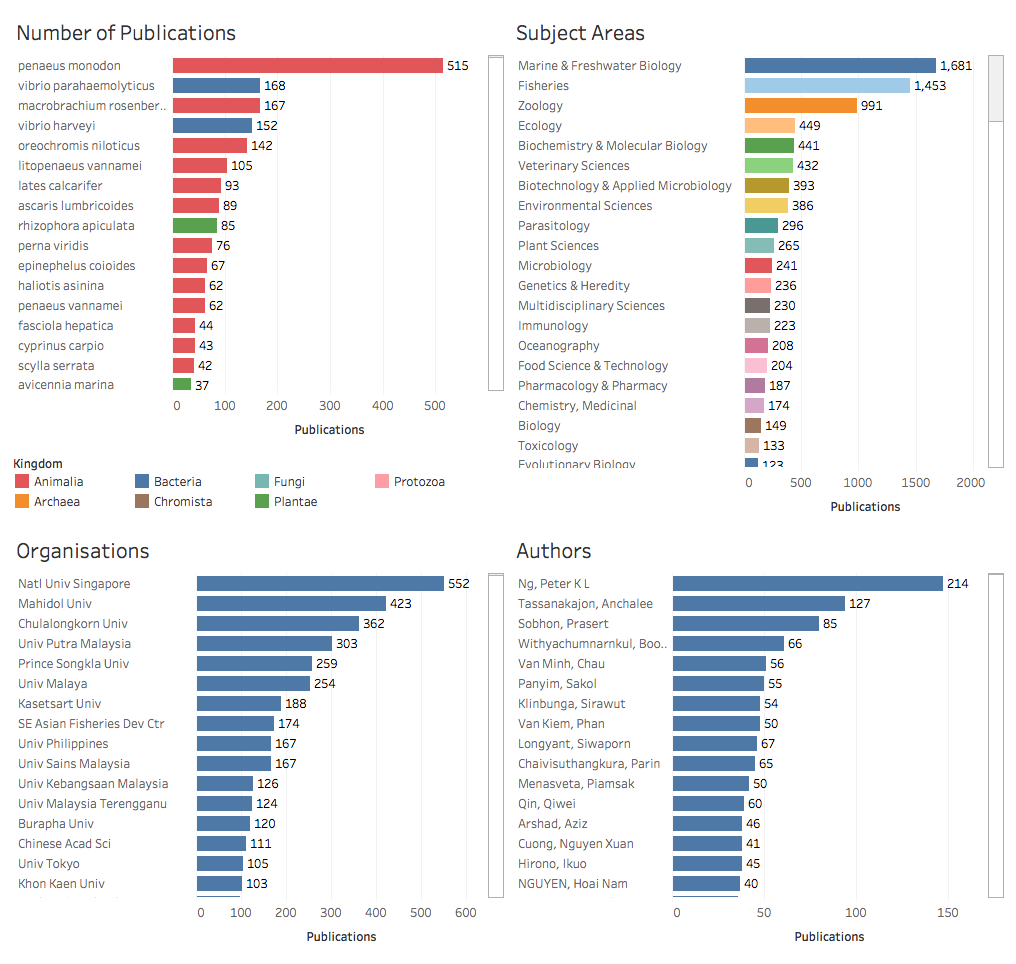

Figure 2.2 displays details of the species, subject areas, organisations and authors.

Figure 2.2: Overview of Marine Species, Organisations and Authors

The effect of the use of dashboards is to convey the principle factual information in an easily digestible form. As readers will commonly scan from left to right, the first panel should contain the key “focus” information that you wish to convey. In the first case above the aim of the first panel is to draw attention to the fact that the data is from South East Asia. In the second panel the aim is to draw attention to the marine species as the key to interpreting what the data is about. Note that attention may be required to issues such as the size of fonts and the number of panels in communicating results to the reader.

Tableau was a pioneer in the use of dashboards, but many other software providers now offer variants of dashboards and it is possible to write dashboards in code using R (for example flexdashboards) and in Python (for example using Dash from Plotly) among others. While Tableau Public and Tableau Desktop provide easy options for creating interactive versions of dashboards (based on SQL joins between shared fields), programming languages provide for much greater customisation and flexibility. It is also possible to have the best of both worlds, for example by using Tableau for fast exploratory prototyping and then writing your own code, or by using extensions such as the R Shiny Tableau extension.

One issue with visualisations of data in this way is that they are vertical. We do not see the relationships between entities in the data when in practice scientific research is commonly conducted as part of networks of collaboration on different levels. Network visualisations address this problem.

2.5.2 Network Visualisation

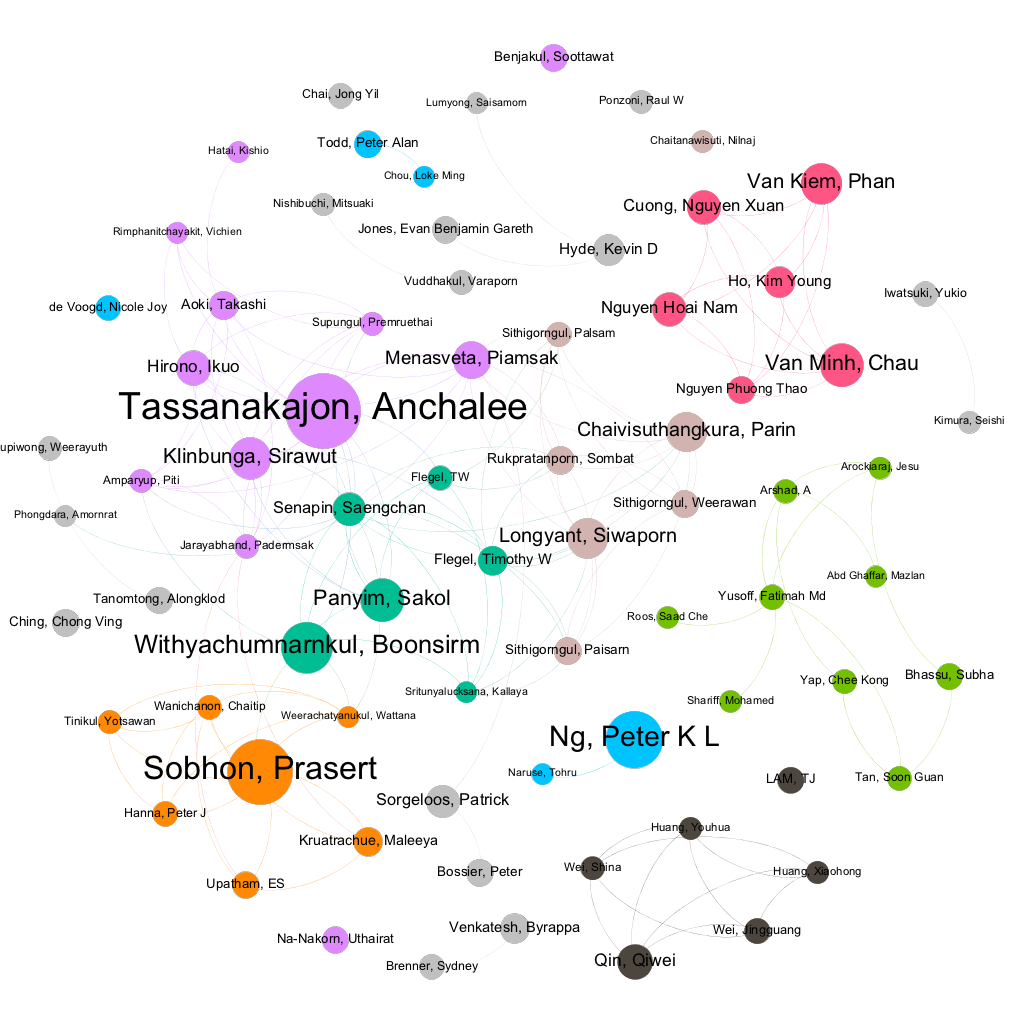

Figure 2.3 displays a network view of the relationships between authors involved in scientific research on marine genetic resources in South East Asia. The dots are sized based on the number of publications associated with an author. The lines or edges represent co-authored publications. The network has been limited to display authors with 20 or more publications.

Figure 2.3: Research Networks for researchers with 20 or more publications on marine genetic resources

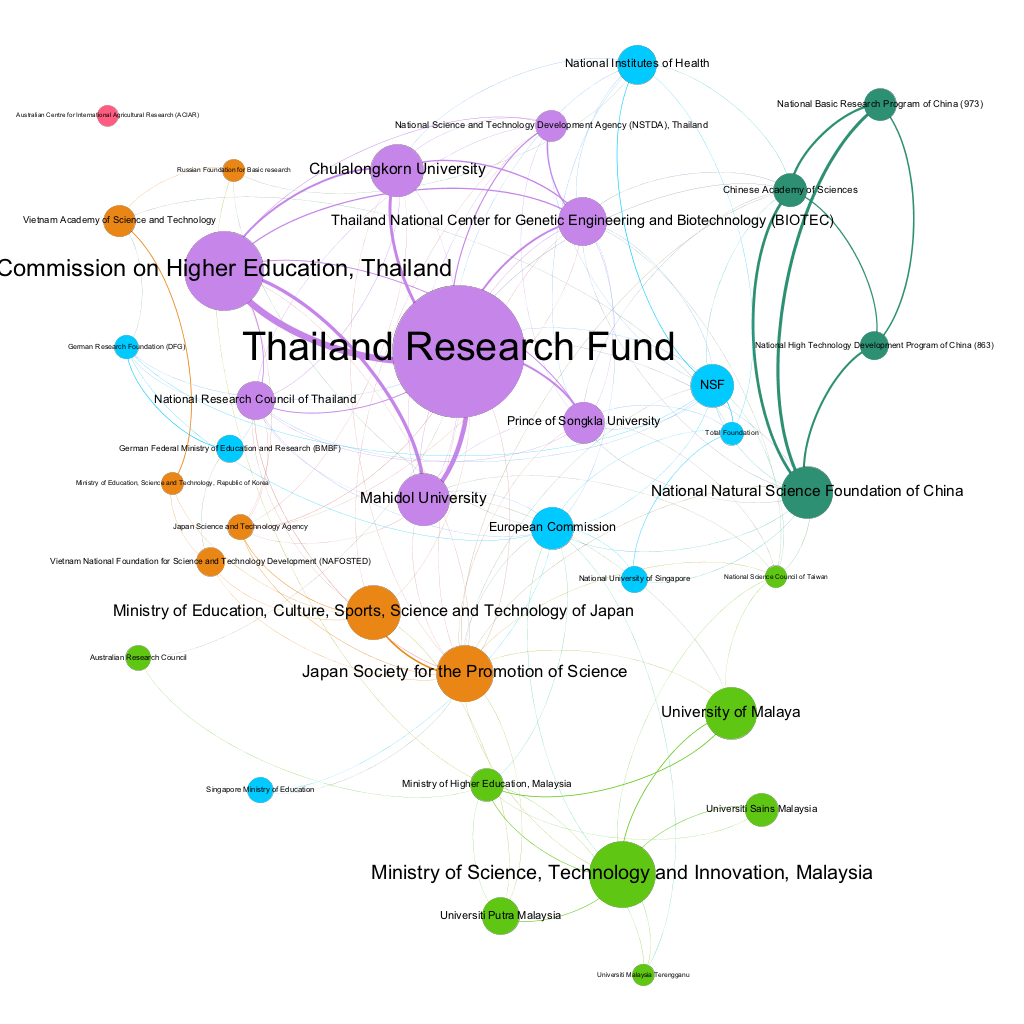

These network images are important because they display relationships that are difficult to see in any other way. A particularly good example of this is networks of funding organisations as set out in Figure 2.4. Note that in Figure 2.4 the size of the dots represents the number of publications where the funding agency appears in the acknowledgements ad does not reflect the size of financial investments. The lines represent publications where different funding agencies appear in the acknowledgements.

Figure 2.4: Network of Funding Organisations Supporting Research on Marine Genetic Resources

The full extent of network relationships is typically invisible to network participants. This is particularly true for networks of funding organisations. However, network visualisation is a powerful tool for engagement with researchers and audiences interested in a particular subject. The network visualisations presented above were created using the free Gephi software and a practical guide to creating these networks is provided in Chapter 10 of the WIPO Manual on Open Source Patent Analytics.

2.5.3 Other forms of visualisation

Data visualisation has advanced rapidly in recent years and the D3 JavaScript library has been responsible for a virtual explosion in creativity with interactive graphics. Examples of visualisation possibilities can be viewed in the D3 gallery on Github https://github.com/d3/d3/wiki/Gallery. One among other possibly fruitful options for data visualization is the Sankey diagram a form of dendrogram that aims to display the flow of energy between entities. Figure 2.5 displays the flow of research publications on a marine species in South East Asia into journals by subject area.

Figure 2.5: A Sankey Diagram showing flows of research on marine genera into journals by subject areas

This type of visualisation serves the useful purpose of showing the flow of research effort represented by publications as outputs into different subject areas. A particular strength of this visualisation is that we can see the proportion of research on a particular genus of marine organisms such as prawns in the genus Penaeus such as the Giant Tiger Prawn into journals on particular subjects. For Penaeus, a major focus of aquaculture in South East Asia, we can see that the flow of research energy is channeled towards fisheries, marine and freshwater biology and Veterinary Sciences (to address diseases with an effect on this commercially important genus such as viruses in the genus Vibrio). When viewed online this diagram is interactive and will highlight flows from a particular genus to a subject area.

The ability to create Sankey diagrams depends in a large measure on a willingness to engage with a programming language such as Javascript, R or Python that provide libraries to make the calculations and generate the Javascript diagrams. Thus the diagram above was generated with the D3network package in R. However, a number of online services offer the ability to create Sankey diagrams and these may meet your needs.

2.6 Linking the Scientific Literature with Patent Analysis

Analysis of the scientific literature is important because it allows us to understand the landscape of research for a particular topic. In the case of drone technology we saw that exploratory searches could assist in identifying key words for the construction of more refined search strategies and to progressively exclude noise from the results. In the data we have presented above on research on marine genetic resources in South East Asia we processed the data to answer the following fundamental questions:

- Who (and with whom?)

- What

- Where

- When

- How

These are standard questions in empirical research. The final question requires detailed attention to the literature itself in terms of understanding the precise subject matter of research by a particular individual or a research team. However, this type of landscape analysis allows us to investigate whether research has the potential to be transformed into a commercial product, method or process and therefore brings us to the patent and wider intellectual property system.

This type of research can be useful on a range of different levels:

- universities may be interested in identifying research outputs that may have potential to turn into useful products, methods or processes

- companies active in particular sectors may be seeking to develop new products and are seeking to identify relevant existing research

- Funding agencies may be seeking to understand the existing outcomes of research investments and to identify relevant areas of priority research that promise to result in new and useful products.

In many cases analysis of the scientific landscape will take place at a lower level than the ten countries covered by the research on South East Asian countries covered above. However, this example illustrates the possibility of using these methods and approaches to answer empirical questions at scale and then to drill down into the fine grained detail of research.

One major question that arises here is how to link together research on the scientific literature with research in the patent system. There are two main answers to this.

- To use keywords and phrases identified in research from the scientific literature as the basis for searches of the patent literature.

This is likely to be the most common approach. As discussed above access to sections of the literature such as titles, abstracts and author keywords allows for the application of basic text mining approaches to breaking texts into words and phrases. This in turn allows for the literature to be classified and refined to identify targets of interest. In software such as VantagePoint this is commonly done by sorting the data into groups. For example, in the case of drone technology one important area of research focuses on sensors while another separate area of research focuses on wireless devices to supply power to a drone while a third focuses on devices such as headsets and other devices for controlling a drone in flight.

- To focus on identifying individual researchers who are active in a research field who are also active in the patent system

This approach to linking scientific research with patent data is rarer for the straightforward reason that it is much harder to do at scale than an approach using keywords. However, it has the advantage of providing a clearer view of researchers who are already active in commercial research and development with a high degree of precision.

2.7 Linking Citations with Patent Literature

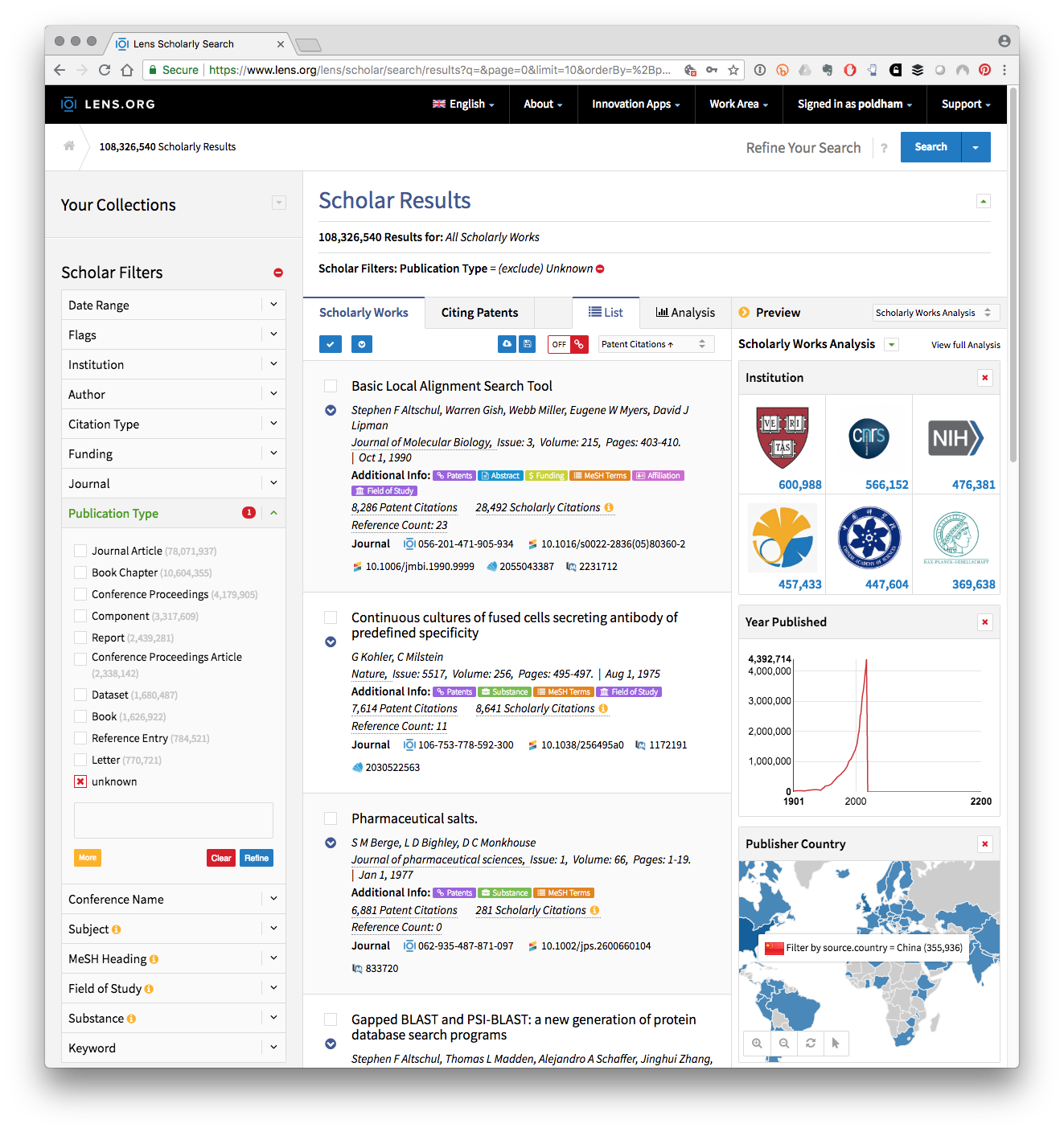

An alternative way to think about the relationship between the scientific literature and patent activity is to focus on non-patent literature citations (Callaert, Van Looy, et al. 2006). In a recent development the open access Lens patent database has done extensive work to link document identifiers in the non-patent literature to the Crossref database of metadata on over 96 million publications and to link records with PubMed and Microsoft Academic. The effect is to create a bridge based on identifiers between the scientific and the patent literature. Figure 2.6

Figure 2.6: Literature Citations Linking to Patent Citations and to external data sources in the Lens

This figure shows the top ranking literature citation across the data for the well known Basic Local Alignment Search Tool or BLAST that is widely used in fields such as genomics. Each entry links to a summary table include Medical Subject Headings (MeSH) terms where appropriate and a Citations page that will reveal Patent citations and Literature citations. Registered users, registration is free, can store and then export the results. Table 2.5 presents a sample of fields from the top 5 of the 9000 exported results from a search of Lens Scholar for “synthetic biology”.

| Title | Referenced by Patent Count | Publication Year | Citation IDs | Author/s |

|---|---|---|---|---|

| Accurate multiplex gene synthesis from programmable DNA microchips. | 188 | 2004 | (magid) mag2027912527; (doi) 10.1038/nature03151; (pmid) 15616567 | Jingdong Tian; Hui Gong; Nijing Sheng; Xiaochuan Zhou; Erdogan Gulari; Xiaolian Gao; George M. Church |

| De novo biosynthetic pathways: rational design of microbial chemical factories. | 91 | 2008 | (pmid) 18725289; (magid) mag2092471565; (doi) 10.1016/j.copbio.2008.07.009 | Kristala L. J. Prather; Collin H. Martin |

| Harnessing homologous recombination in vitro to generate recombinant DNA via SLIC. | 81 | 2007 | (doi) 10.1038/nmeth1010; (pmid) 17293868; (magid) mag2102440675 | Mamie Z. Li; Stephen J. Elledge |

| Microfluidic PicoArray synthesis of oligodeoxynucleotides and simultaneous assembling of multiple DNA sequences | 65 | 2004 | (pmcid) pmc524290; (pmid) 15477391; (magid) mag2146545072; (doi) 10.1093/nar/gkh879 | Xiaochuan Zhou; Shi-Ying Cai; Ailing Hong; Qimin You; Peilin Yu; Nijing Sheng; Onnop Srivannavit; Seema Muranjan; Jean Marie Rouillard; Yongmei Xia; Xiaolin Zhang; Qin Xiang; Renuka Ganesh; Qi Zhu; Anna Matejko; Erdogan Gulari; Xiaolian Gao |

| Gene Designer: a synthetic biology tool for constructing artificial DNA segments | 59 | 2006 | (pmcid) pmc1523223; (pmid) 16756672; (magid) mag1760665500; (doi) 10.1186/1471-2105-7-285 | Alan Villalobos; Jon E. Ness; Claes Gustafsson; Jeremy Minshull; Sridhar Govindarajan |

As this suggests, growing trends towards the federation of the scientific and patent literature present important opportunities for designing search strategies and more flexible approaches to the analysis and communication of results building on both the scientific and the patent literature.

2.8 Conclusion

This chapter has focus on methods for working with data from the scientific literature, using analysis of the scientific literature to build up a search strategy, the development of a scientific landscape study and methods for linking scientific literature to patent analytics.

It is important to emphasise that a major transition is taking place in the availability of the scientific literature and our ability to link scientific literature to patent data. What may be described as traditional databases that focus on closed subscription based access with severe limitations on data downloads are being replaced by large open access databases such as Microsoft Academic Graph, Crossref, PubMed, core.ac.uk and the Lens. These databases typically offer access to data at scale for free or use freemium subscription models for higher levels of access or the use of APIs while maintaining a generous free tier. How these business models will work out over the longer term remains to be seen. However, in practice the major constraint in accessing scientific publications, notably meta data about publications, is no longer the cost and download limitations placed on users by commercial providers. Rather, the main constraint is the capacity to manage and process data at the level of hundreds of millions of records.

In many cases patent analysts will not need to process hundreds of millions of records. Rather, what will be of interest is identifying the relationship between scientific literature and patent data through the exploration of citations. The Lens database has pioneered the integration of citations between the scientific literature and patent data and developments in the open source community such as the PATCIT will further open up the linkages between patent activity and underlying research activity.

A key feature of these emerging developments is the increasing application of machine learning approaches to the scientific and patent literature. We turn to these developments in Chapter 7.

References

Jeffrey Friedl, Source of the famous “Now you have two problems” quote http://regex.info/blog/2006-09-15/247↩︎

See for example the

Basic Regular Expressions in Rcheat sheet by Ian Kopacka available from RStudio↩︎This example is adapted from the Wikipedia entry at https://en.wikipedia.org/wiki/Precision_and_recall↩︎

The workshop live stream archive is available at https://live stream.com/uspto/PatentsViewInventorWorkshop↩︎